What sounds like music to us may just be noise to a macaque monkey.

That’s because a monkey’s brain appears to lack critical circuits that are highly sensitive to a sound’s pitch, a team reported Monday in the journal Nature Neuroscience.

The finding suggests that humans may have developed brain areas that are sensitive to pitch and tone in order to process the sounds associated with speech and music.

“The macaque monkey doesn’t have the hardware,” says Bevil Conway, an investigator at the National Institutes of Health. “The question in my mind is, what are the monkeys hearing when they listen to Tchaikovsky’s Fifth Symphony?”

The study began with a bet between Conway and Sam Norman-Haignere, who was a graduate student at the time.

Norman-Haignere, who is now a postdoctoral researcher at Columbia University, was part of a team that found evidence that the human brain responds to a sound’s pitch.

“I was like, well if you see that and it’s a robust finding you see in humans, we’ll see it in monkeys,” Conway says.

But Norman-Haignere thought monkey brains might be different.

“Honestly, I wasn’t sure,” Norman-Haignere says. “I mean that’s usually a sign of a good experiment, you know, when you don’t know what the outcome is.”

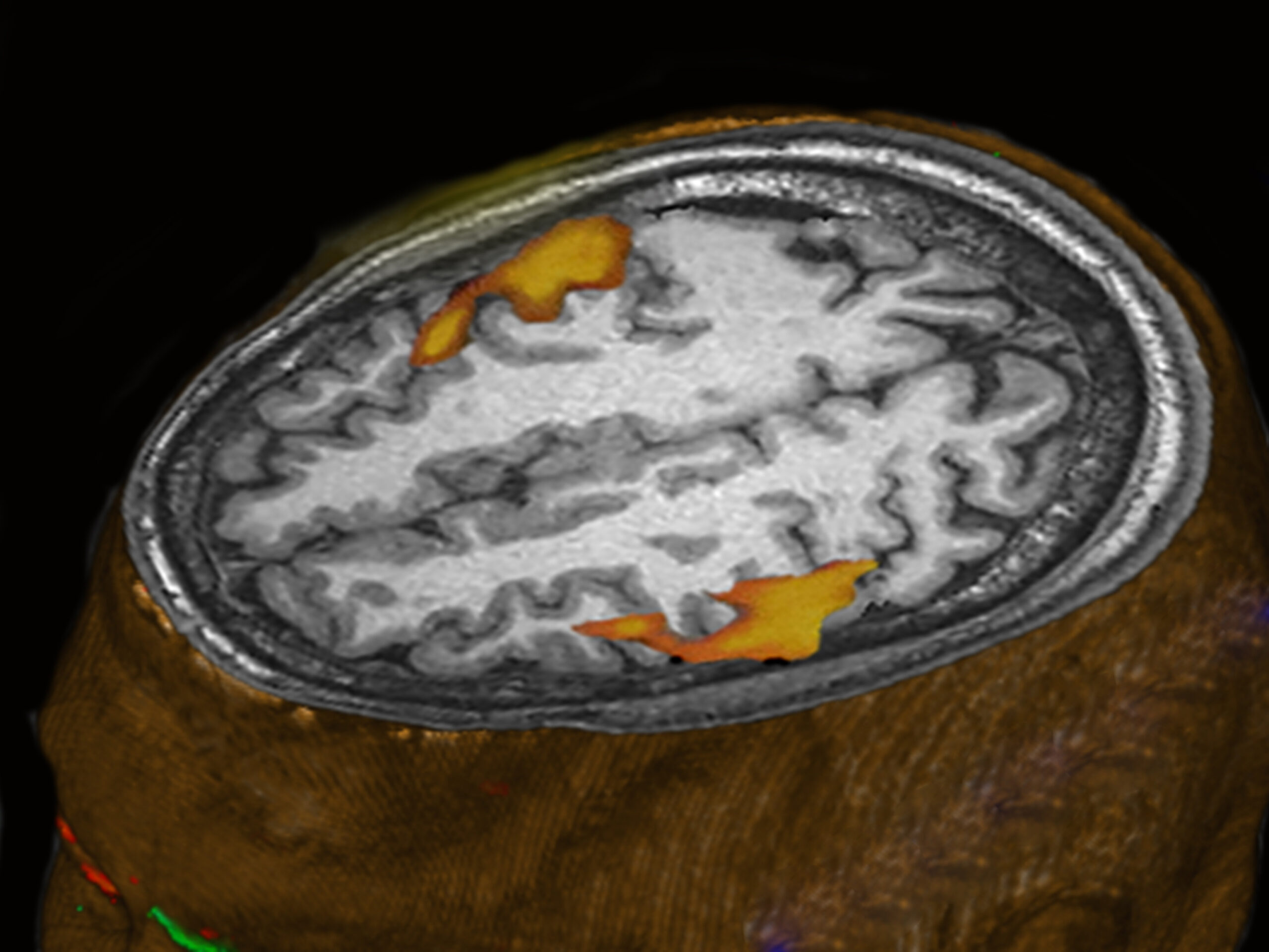

So the two scientists and several colleagues used a special type of MRI to monitor the brains of six people and five macaque monkeys as they listened to a range of sounds through headphones.

Some of the sounds were more like music, where changes in pitch are obvious.

Other sounds were more like noise.

And Conway says it didn’t take long to realize he’d lost his bet.

“In humans you see this beautiful organization, pitch bias, and it’s clear as day,” Conway says. In monkeys, he says, “we see nothing.”

That surprised Conway because his own research had shown that the two species are nearly identical when it comes to processing visual information.

“When I look at something, I’m pretty sure that the monkey is seeing the same thing that I’m seeing,” he says. “But here in the auditory domain it seems fundamentally different.”

The study didn’t try to explain why sounds would be processed differently in a human brain. But one possibility involves our exposure to speech and music.

“Both speech and music are highly complex structured sounds,” Norman-Haignere says, “and it’s totally plausible that the brain has developed regions that are highly tuned to those structures.”

That tuning could be the result of “something in our genetic code that causes those regions to develop the way they are and to be located where they are,” Norman-Haignere says.

Or, he says, it could be that these brain regions develop as children listen to music and speech.

“Regardless, subtle changes in pitch and tone seem to be critical when people want to convey emotion,” Conway says.

“You can know whether or not I’m angry or sad or questioning or confused, and you can get almost all of that meaning just from the tone,” he says.

Copyright 2019 NPR. To see more, visit https://www.npr.org.
