Training A Computer To Read Mammograms As Well As A Doctor

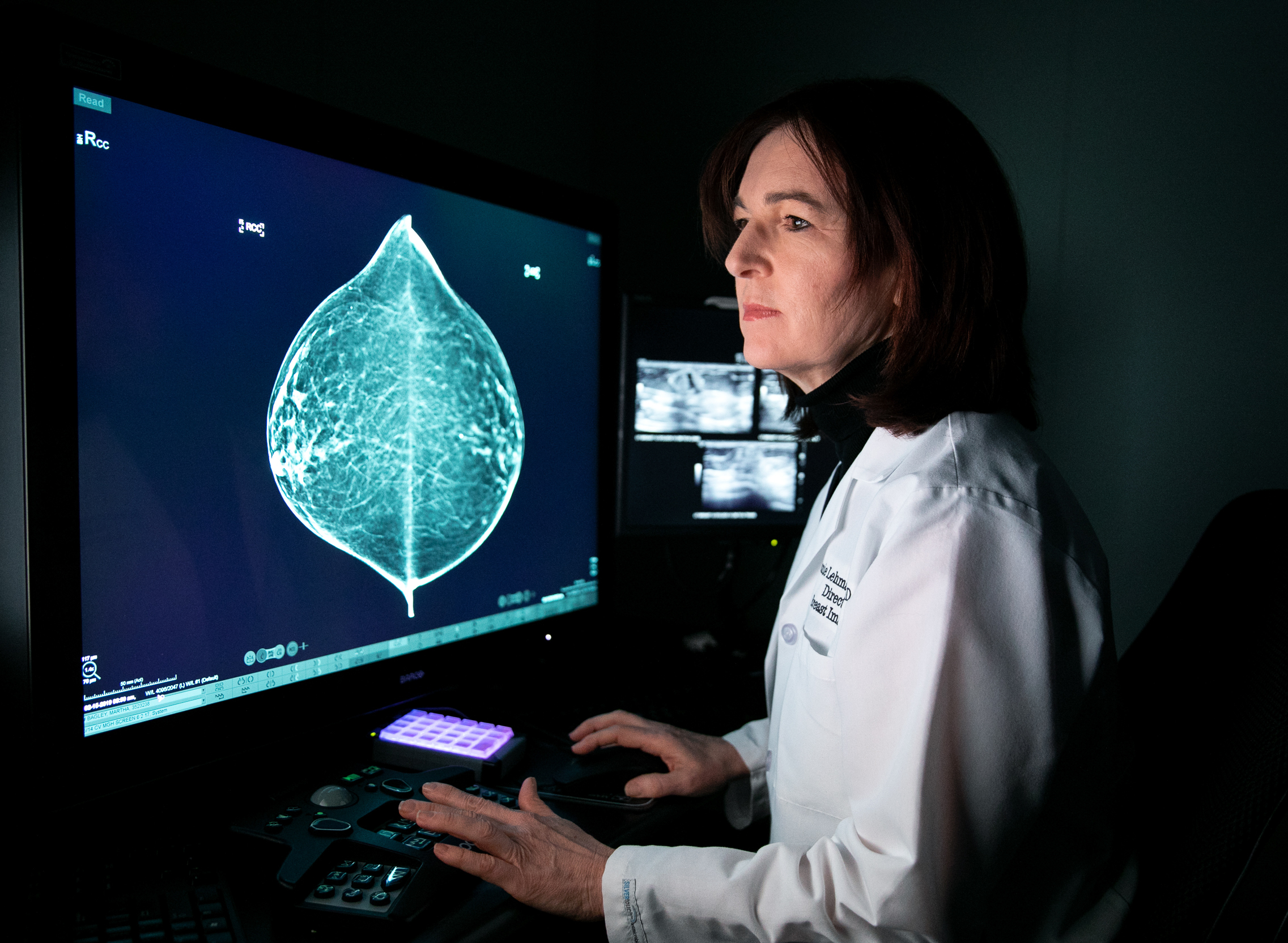

“The optimist in me says in three years we can train this tool to read mammograms as well as an average radiologist,” says Connie Lehman, chief of breast imaging at Massachusetts General Hospital in Boston.

Regina Barzilay teaches one of the most popular computer science classes at the Massachusetts Institute of Technology.

And in her research — at least until five years ago — she looked at how a computer could use machine learning to read and decipher obscure ancient texts.

“This is clearly of no practical use,” she says with a laugh. “But it was really cool, and I was really obsessed about this topic, how machines could do it.”

But in 2014, Barzilay was diagnosed with breast cancer. And that not only disrupted her life, but it led her to rethink her research career. She has landed at the vanguard of a rapidly growing effort to revolutionize mammography and breast cancer management with the use of computer algorithms.

She started down that path after her disease put her into the deep end of the American medical system. She found it baffling.

“I was really surprised how primitive information technology is in the hospitals,” she says. “It almost felt that we were in a different century.”

Questions that seemed answerable were hopelessly out of reach, even though the hospital had plenty of data to work from.

“At every point of my treatment, there would be some point of uncertainty, and I would say, ‘Gosh, I wish we had the technology to solve it,’ ” she says. “So when I was done with the treatment, I started my long journey toward this goal.”

Getting started wasn’t so easy. Barzilay found that the National Cancer Institute wasn’t interested in funding her research on using artificial intelligence to improve breast cancer treatment. Likewise, she says she couldn’t get money out of the National Science Foundation, which funds computer studies. But private foundations ultimately stepped up to get the work rolling.

Barzilay struck up a collaboration with Connie Lehman, a Harvard University radiologist who is chief of breast imaging at Massachusetts General Hospital. We meet in a dim, hushed room where Lehman shows me the progress that she and her colleagues have made in bringing artificial intelligence to one of the most common medical exams in the United States. More than 39 million mammograms are performed annually, according to data from the Food and Drug Administration.

Step one in reading a mammogram is to determine breast density. Lehman’s first collaboration with Barzilay was to develop what’s called a deep-learning algorithm to perform this essential task.

“We’re excited about this because we find there’s a lot of human variation in assessing breast density,” Lehman says, “and so we’ve trained our deep-learning model to assess the density in a much more consistent way.”

Lehman reads a mammogram and assesses the density; then she pushes a button to see what the algorithm concluded. The assessments match.

Next, she toggles back and forth between new breast images and those taken at the patient’s previous appointment. Doing this job is the next task she hopes computer models will take over.

“These are the sorts of things that we can also teach a model, but more importantly we allow the model to teach itself,” she says. That’s the power of artificial intelligence — it’s not simply automating rules that the researchers provide but also creating its own rules.

“The optimist in me says in three years we can train this tool to read mammograms as well as an average radiologist,” she says. “So we’ll see. That’s what we’re working on.”

This is an area that’s evolving rapidly. For example, researchers at Radboud University Medical Center in the Netherlands spun off a company, ScreenPoint Medical, whose software can already read mammograms as well as the average radiologist, says Ioannis Sechopoulos, a radiologist at the university who ran a study to evaluate the software.

“A very good breast radiologist is still better than the computer,” Sechopoulos says, but “there’s no theoretical reason for [the software] not to become as good as the best breast radiologists in the world.”

At least initially, Sechopoulos suggests, computers could identify mammograms that are clearly normal. “So we can get rid of the human reading a significant portion of normal mammograms,” he says. That could free up radiologists to perform more demanding tasks and could potentially save money.

Sechopoulos says the biggest challenge now isn’t technology but ethics. When the algorithm makes a mistake, “then who’s responsible, and who do we sue?” he asks. “That medical-legal aspect has to be solved first.”

Lehman sees other challenges. One question she’s starting to explore is whether women will be comfortable having this potentially life-or-death task turned over to a computer algorithm.

“I know a lot of people say … ‘I’m intrigued by [artificial intelligence], but I’m not sure I’m ready to get in the back of the car and let the computer drive me around, unless there’s a human being there to take the wheel when necessary,’ ” Lehman says.

She asks a patient, Susan Biener Bergman, a 62-year-old physician from a nearby suburb, how she feels about it.

Bergman agrees that giving that much control to a computer is “creepy,” but she also sees the value in automation. “Computers remember facts better than humans do,” she says. And as long as a trustworthy human being is still in the loop, she’s OK with empowering an algorithm to read her mammogram.

Lehman is happy to hear that. But she’s also mindful that trusted technologies haven’t always been trustworthy. Twenty years ago, radiologists adopted a technology called CAD, short for computer-aided detection, which was supposed to help them find tumors on mammograms.

“The CAD story is a pretty uncomfortable one for us in mammography,” Lehman says.

The technology became ubiquitous due to the efforts of its commercial developers. “They lobbied to have CAD paid for,” she says, “and they convinced Congress this is better for women — and if you want your women constituents to know that you support women, you should support this.”

Once Medicare agreed to pay for it, CAD became widely adopted, despite misgivings among many radiologists.

A few years ago, Lehman and her colleagues decided to see if CAD was actually beneficial. They compared doctors at centers that used the software with doctors at those that didn’t to see who was more adept at finding suspicious spots.

Radiologists “actually did better at centers without CAD,” Lehman and her colleagues concluded in a study. Doctors may have been distracted by so many false indications that popped up on the mammograms, or perhaps they became complacent, figuring the computer was doing a perfect job.

Whatever the reason, Lehman says, “we want to make sure as we’re developing and evaluating and implementing artificial intelligence and deep learning, we don’t repeat the mistakes of our past.”

That’s certainly on the mind of Joshua Fenton, a family practice doctor at the University of California, Davis’ Center for Healthcare Policy and Research. He has written about the evidence that led the FDA to let companies market CAD technology.

“It was, quote, ‘promising’ data, but definitely not blockbuster data — definitely not large population studies or randomized trials,” Fenton says.

The agency didn’t foresee how doctors would change their behavior — evidently not for the better — when using computers equipped with the software.

“We can’t always anticipate how a technology will be used in practice,” Fenton says, so he would like the FDA to monitor software like this after it has been on the market to see if its use is actually improving medical care.

Those challenges will grow as algorithms take on ever more tasks. And that’s on the not-so-distant horizon.

Lehman and Barzilay are already thinking beyond the initial reading of mammograms and are looking for algorithms to pick up tasks that humans currently can’t perform well or at all.

One project is an algorithm that can examine a high-risk spot on a mammogram and provide advice about whether a biopsy is necessary. Reducing the number of unnecessary biopsies would reduce costs and help women avoid the procedure.

In 2017, Barzilay, Lehman and colleagues reported that their algorithm could reduce biopsies by about 30 percent.

They have also developed a computer program that analyzes a lot of information about a patient to predict future risk of breast cancer.

The first time you go and do your screening, Barzilay says, the algorithm doesn’t just look for cancer on your mammogram — “the model tells you what is the likelihood that you develop cancer within two years, three years, 10 years.”

That projection can help women and doctors decide how frequently to screen for breast cancer.

“We’re so excited about it because it is a stronger predictor than anything else that we’ve found out there,” Lehman says. Unlike other tools like this, which were developed by examining predominantly white European women, it works well among women of all races and ages, she says.

Lehman is mindful that an algorithm developed at one hospital or among one demographic might fail when tried elsewhere, so her research addresses that issue. But potential pitfalls aren’t what keep her up at night.

“What keeps me up at night is 500,000 women [worldwide] die every year of breast cancer,” she says. She would like to find ways to accelerate progress so that innovations can help people sooner.

And that imperative calls for more than new technology, she says — it calls for a new philosophy.

“We’re too fearful of change,” she says. “We’re too fearful of losing our jobs. We’re too fearful of things not staying the way they’ve always been. We’re going to have to think differently.”

You can contact NPR Science correspondent Richard Harris at rharris@npr.org.
